1..2…3…Freeze….Peak

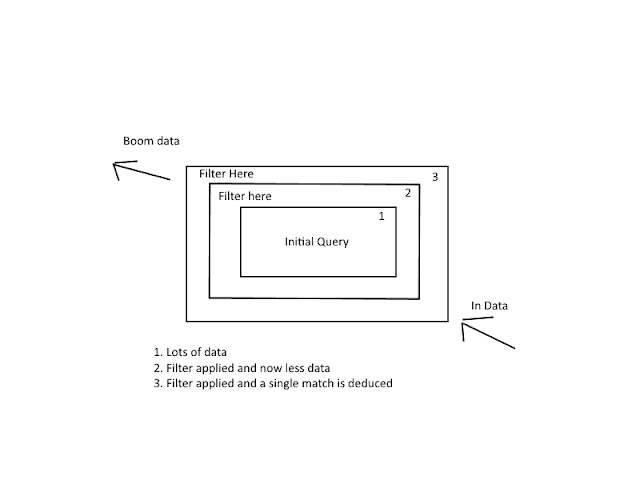

I haven’t written anything for ages, as I’ve been the busiest I have ever been at my current employer, a large e-commerce website, as a result of preparation (planned and unplanned) for its largest ever “Peak” weekend, my company’s name for the Black Friday weekend. Sorry, this is a long read, so grab a coffee, skip to the Summary, or don't read it!

I started writing this on the train back from London, where I was required to provide extra support for the Payments Platform (Domain), where I ply my trade: a group of microservices and legacy components that support payment processing for the website and sit very close to the Orders Domain. I expected to be bleary-eyed and wired to the hilt, full of coffee and adrenaline, as my colleagues and I worked like mad to pull out all the stops to make our giant, incredible machine work properly. But no: as our CIO put it, everything just worked (well, 99% of the time; more on this later). The freeze